llama.cpp Engine

What is llama.cpp?

Section titled “What is llama.cpp?”llama.cpp is the core inference engine that powers Jan’s ability to run AI models locally on your computer. Created by Georgi Gerganov, it’s designed to run large language models efficiently on consumer hardware without requiring specialized AI accelerators or cloud connections.

Key benefits:

- Run models entirely offline after download

- Use your existing hardware (CPU, GPU, or Apple Silicon)

- Complete privacy - conversations never leave your device

- No API costs or subscription fees

Accessing Engine Settings

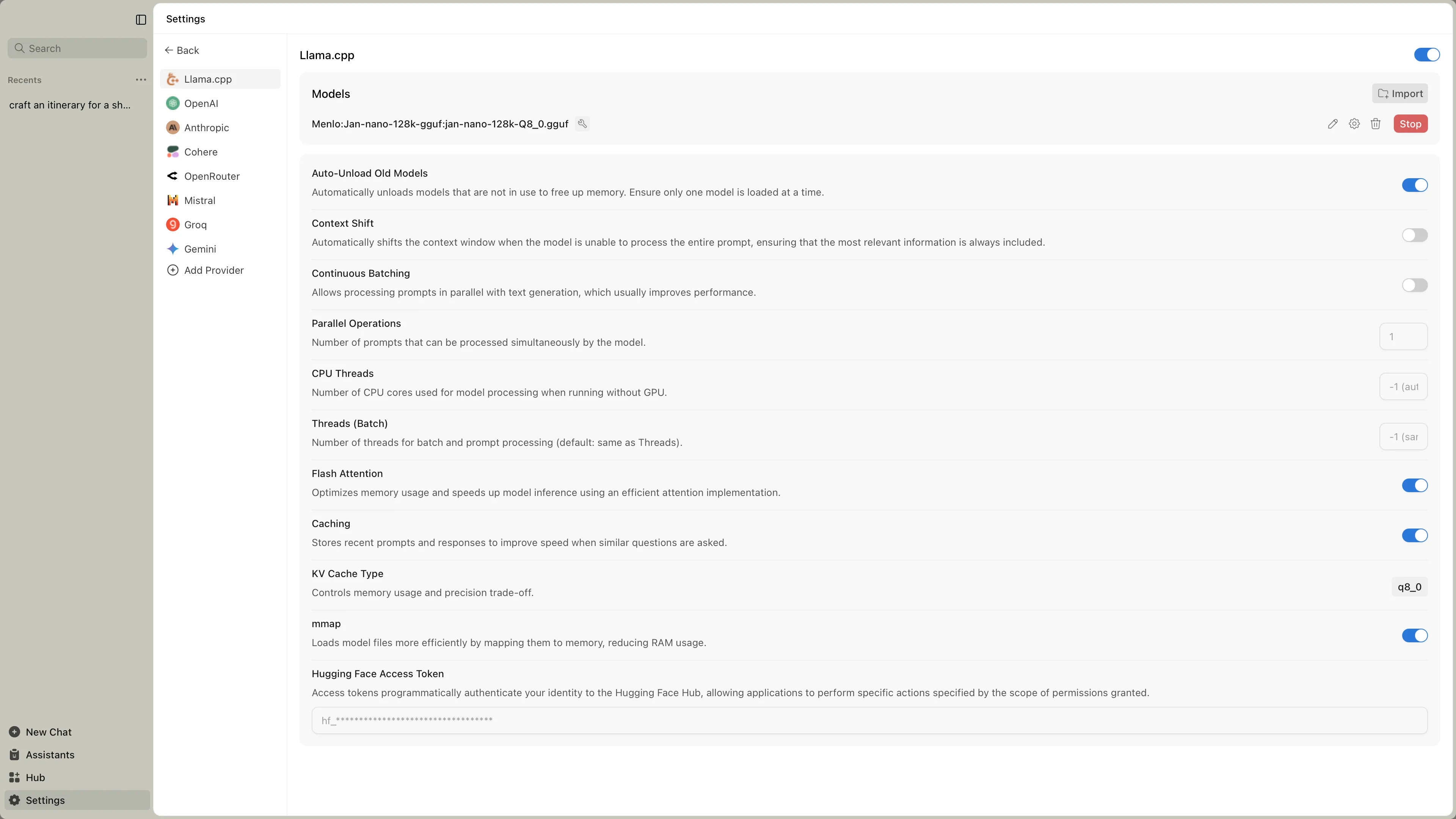

Section titled “Accessing Engine Settings”Navigate to Settings > Model Providers > Llama.cpp:

Engine Management

Section titled “Engine Management”| Feature | What It Does | When to Use |

|---|---|---|

| Engine Version | Shows current llama.cpp version | Check when models require newer engine |

| Check Updates | Downloads latest engine | Update for new model support or bug fixes |

| Backend Selection | Choose hardware-optimized version | After hardware changes or performance issues |

Selecting the Right Backend

Section titled “Selecting the Right Backend”Different backends are optimized for specific hardware. Choose the one that matches your system:

NVIDIA Graphics Cards

Section titled “NVIDIA Graphics Cards”Check your CUDA version in NVIDIA Control Panel, then select:

CUDA 12.0 (Most Common):

llama.cpp-avx2-cuda-12-0- Modern CPUs with AVX2llama.cpp-avx512-cuda-12-0- Newer Intel/AMD CPUs with AVX512llama.cpp-avx-cuda-12-0- Older CPUs without AVX2

CUDA 11.7 (Older Drivers):

llama.cpp-avx2-cuda-11-7- Modern CPUsllama.cpp-avx-cuda-11-7- Older CPUs

CPU Only

Section titled “CPU Only”llama.cpp-avx2- Most modern CPUs (2013+)llama.cpp-avx512- High-end Intel/AMD CPUsllama.cpp-avx- Older CPUs (2011-2013)llama.cpp-noavx- Very old CPUs (pre-2011)

AMD/Intel Graphics

Section titled “AMD/Intel Graphics”llama.cpp-vulkan- AMD Radeon, Intel Arc, Intel integrated

Apple Silicon (M1/M2/M3/M4)

Section titled “Apple Silicon (M1/M2/M3/M4)”llama.cpp-mac-arm64- Automatically uses GPU acceleration via Metal

Intel Macs

Section titled “Intel Macs”llama.cpp-mac-amd64- CPU-only processing

NVIDIA Graphics Cards

Section titled “NVIDIA Graphics Cards”llama.cpp-avx2-cuda-12-0- CUDA 12.0+ with modern CPUllama.cpp-avx2-cuda-11-7- CUDA 11.7+ with modern CPU

CPU Only

Section titled “CPU Only”llama.cpp-avx2- x86_64 modern CPUsllama.cpp-avx512- High-end Intel/AMD CPUsllama.cpp-arm64- ARM processors (Raspberry Pi, etc.)

AMD/Intel Graphics

Section titled “AMD/Intel Graphics”llama.cpp-vulkan- Open-source GPU acceleration

Performance Settings

Section titled “Performance Settings”Configure how the engine processes requests:

Core Performance

Section titled “Core Performance”| Setting | What It Does | Default | When to Adjust |

|---|---|---|---|

| Auto-update engine | Automatically updates llama.cpp to latest version | Enabled | Disable if you need version stability |

| Auto-Unload Old Models | Frees memory by unloading unused models | Disabled | Enable if switching between many models |

| Threads | CPU cores for text generation (-1 = all cores) | -1 | Reduce if you need CPU for other tasks |

| Threads (Batch) | CPU cores for batch processing | -1 | Usually matches Threads setting |

| Context Shift | Removes old text to fit new text in memory | Disabled | Enable for very long conversations |

| Max Tokens to Predict | Maximum response length (-1 = unlimited) | -1 | Set a limit to control response size |

Simple Analogy: Think of threads like workers in a factory. More workers (threads) means faster production, but if you need workers elsewhere (other programs), you might want to limit how many the factory uses.

Batch Processing

Section titled “Batch Processing”| Setting | What It Does | Default | When to Adjust |

|---|---|---|---|

| Batch Size | Logical batch size for prompt processing | 2048 | Lower if you have memory issues |

| uBatch Size | Physical batch size for hardware | 512 | Match your GPU’s capabilities |

| Continuous Batching | Process multiple requests at once | Enabled | Keep enabled for efficiency |

Simple Analogy: Batch size is like the size of a delivery truck. A bigger truck (batch) can carry more packages (tokens) at once, but needs a bigger garage (memory) and more fuel (processing power).

Multi-GPU Settings

Section titled “Multi-GPU Settings”| Setting | What It Does | Default | When to Adjust |

|---|---|---|---|

| GPU Split Mode | How to divide model across GPUs | Layer | Change only with multiple GPUs |

| Main GPU Index | Primary GPU for processing | 0 | Select different GPU if needed |

When to tweak: Only adjust if you have multiple GPUs and want to optimize how the model is distributed across them.

Memory Configuration

Section titled “Memory Configuration”Control how models use system and GPU memory:

Memory Management

Section titled “Memory Management”| Setting | What It Does | Default | When to Adjust |

|---|---|---|---|

| Flash Attention | Optimized memory usage for attention | Enabled | Disable only if having stability issues |

| Disable mmap | Turn off memory-mapped file loading | Disabled | Enable if experiencing crashes |

| MLock | Lock model in RAM (no swap to disk) | Disabled | Enable if you have plenty of RAM |

| Disable KV Offload | Keep conversation memory on CPU | Disabled | Enable if GPU memory is limited |

Simple Analogy: Think of your computer’s memory like a desk workspace:

- mmap is like keeping reference books open to specific pages (efficient)

- mlock is like gluing papers to your desk so they can’t fall off (uses more space but faster access)

- Flash Attention is like using sticky notes instead of full pages (saves space)

KV Cache Configuration

Section titled “KV Cache Configuration”| Setting | What It Does | Options | When to Adjust |

|---|---|---|---|

| KV Cache K Type | Precision for “keys” in memory | f16, q8_0, q4_0 | Lower precision saves memory |

| KV Cache V Type | Precision for “values” in memory | f16, q8_0, q4_0 | Lower precision saves memory |

| KV Cache Defragmentation Threshold | When to reorganize memory (0.1 = 10% fragmented) | 0.1 | Increase if seeing memory errors |

Memory Precision Guide:

- f16 (default): Full quality, uses most memory - like HD video

- q8_0: Good quality, moderate memory - like standard video

- q4_0: Acceptable quality, least memory - like compressed video

When to adjust: Start with f16. If you run out of memory, try q8_0. Only use q4_0 if absolutely necessary.

Advanced Settings

Section titled “Advanced Settings”RoPE (Rotary Position Embeddings)

Section titled “RoPE (Rotary Position Embeddings)”| Setting | What It Does | Default | When to Adjust |

|---|---|---|---|

| RoPE Scaling Method | How to extend context length | None | For contexts beyond model’s training |

| RoPE Scale Factor | Context extension multiplier | 1 | Increase for longer contexts |

| RoPE Frequency Base | Base frequency (0 = auto) | 0 | Leave at 0 unless specified |

| RoPE Frequency Scale Factor | Frequency adjustment | 1 | Advanced users only |

Simple Analogy: RoPE is like the model’s sense of position in a conversation. Imagine reading a book:

- Normal: You remember where you are on the page

- RoPE Scaling: Like using a magnifying glass to fit more words on the same page

- Scaling too much can make the text (context) blurry (less accurate)

When to use: Only adjust if you need conversations longer than the model’s default context length and understand the quality tradeoffs.

Mirostat Sampling

Section titled “Mirostat Sampling”| Setting | What It Does | Default | When to Adjust |

|---|---|---|---|

| Mirostat Mode | Alternative text generation method | Disabled | Try for more consistent output |

| Mirostat Learning Rate | How quickly it adapts (eta) | 0.1 | Lower = more stable |

| Mirostat Target Entropy | Target randomness (tau) | 5 | Lower = more focused |

Simple Analogy: Mirostat is like cruise control for text generation:

- Regular sampling: You manually control speed (randomness) with temperature

- Mirostat: Automatically adjusts to maintain consistent “speed” (perplexity)

- Target Entropy: Your desired cruising speed

- Learning Rate: How quickly the cruise control adjusts

When to use: Enable Mirostat if you find regular temperature settings produce inconsistent results. Start with defaults and adjust tau (3-7 range) for different styles.

Structured Output

Section titled “Structured Output”| Setting | What It Does | Default | When to Adjust |

|---|---|---|---|

| Grammar File | BNF grammar to constrain output | None | For specific output formats |

| JSON Schema File | JSON schema to enforce structure | None | For JSON responses |

Simple Analogy: These are like templates or forms the model must fill out:

- Grammar: Like Mad Libs - the model can only put words in specific places

- JSON Schema: Like a tax form - specific fields must be filled with specific types of data

When to use: Only when you need guaranteed structured output (like JSON for an API). Most users won’t need these.

Quick Optimization Guide

Section titled “Quick Optimization Guide”For Best Performance

Section titled “For Best Performance”- Enable: Flash Attention, Continuous Batching

- Set Threads: -1 (use all CPU cores)

- Batch Size: Keep defaults (2048/512)

For Limited Memory

Section titled “For Limited Memory”- Enable: Auto-Unload Models, Flash Attention

- KV Cache: Set both to q8_0 or q4_0

- Reduce: Batch Size to 512/128

For Long Conversations

Section titled “For Long Conversations”- Enable: Context Shift

- Consider: RoPE scaling (with quality tradeoffs)

- Monitor: Memory usage in System Monitor

For Multiple Models

Section titled “For Multiple Models”- Enable: Auto-Unload Old Models

- Disable: MLock (saves RAM)

- Use: Default memory settings

Troubleshooting Settings

Section titled “Troubleshooting Settings”Model crashes or errors:

- Disable mmap

- Reduce Batch Size

- Switch KV Cache to q8_0

Out of memory:

- Enable Auto-Unload

- Reduce KV Cache precision

- Lower Batch Size

Slow performance:

- Check Threads = -1

- Enable Flash Attention

- Verify GPU backend is active

Inconsistent output:

- Try Mirostat mode

- Adjust temperature in model settings

- Check if Context Shift is needed

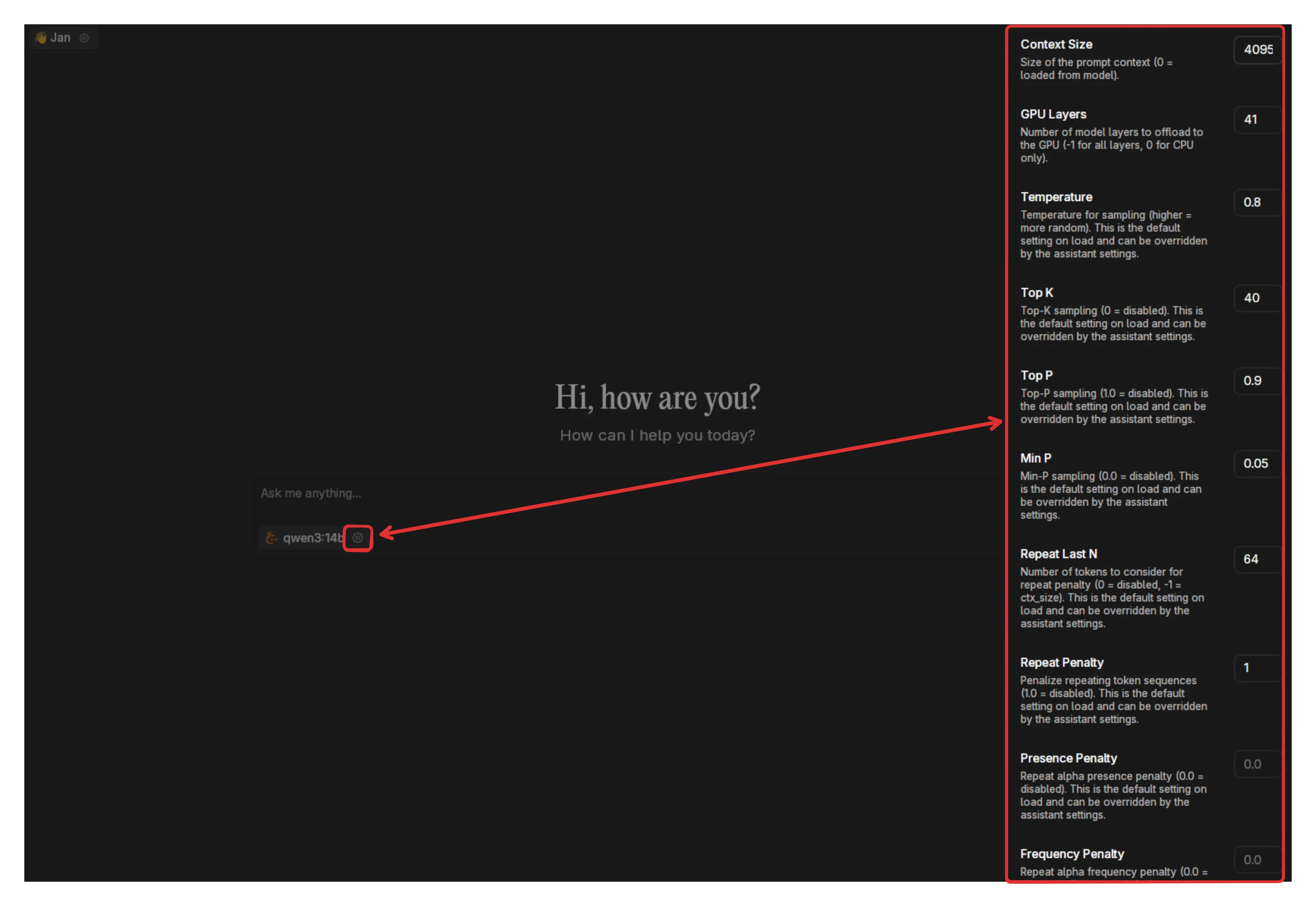

Model-Specific Settings

Section titled “Model-Specific Settings”Each model can override engine defaults. Access via the gear icon next to any model:

| Setting | What It Controls | Impact |

|---|---|---|

| Context Length | Conversation history size | Higher = more memory usage |

| GPU Layers | Model layers on GPU | Higher = faster but more VRAM |

| Temperature | Response randomness | 0.1 = focused, 1.0 = creative |

| Top P | Token selection pool | Lower = more focused responses |

Troubleshooting

Section titled “Troubleshooting”Models Won’t Load

Section titled “Models Won’t Load”- Wrong backend: Try CPU-only backend first (

avx2oravx) - Insufficient memory: Check RAM/VRAM requirements

- Outdated engine: Update to latest version

- Corrupted download: Re-download the model

Slow Performance

Section titled “Slow Performance”- No GPU acceleration: Verify correct CUDA/Vulkan backend

- Too few GPU layers: Increase in model settings

- CPU bottleneck: Check thread count matches cores

- Memory swapping: Reduce context size or use smaller model

Out of Memory

Section titled “Out of Memory”- Reduce quality: Switch KV Cache to q8_0 or q4_0

- Lower context: Decrease context length in model settings

- Fewer layers: Reduce GPU layers

- Smaller model: Use quantized versions (Q4 vs Q8)

Crashes or Instability

Section titled “Crashes or Instability”- Backend mismatch: Use more stable variant (avx vs avx2)

- Driver issues: Update GPU drivers

- Overheating: Monitor temperatures, improve cooling

- Power limits: Check PSU capacity for high-end GPUs

Performance Benchmarks

Section titled “Performance Benchmarks”Typical performance with different configurations:

| Hardware | Model Size | Backend | Tokens/sec |

|---|---|---|---|

| RTX 4090 | 7B Q4 | CUDA 12 | 80-120 |

| RTX 3070 | 7B Q4 | CUDA 12 | 40-60 |

| M2 Pro | 7B Q4 | Metal | 30-50 |

| Ryzen 9 | 7B Q4 | AVX2 | 10-20 |

Advanced Configuration

Section titled “Advanced Configuration”Custom Compilation

Section titled “Custom Compilation”For maximum performance, compile llama.cpp for your specific hardware:

# Clone and build with specific optimizationsgit clone https://github.com/ggerganov/llama.cppcd llama.cpp

# Examples for different systemsmake LLAMA_CUDA=1 # NVIDIA GPUsmake LLAMA_METAL=1 # Apple Siliconmake LLAMA_VULKAN=1 # AMD/Intel GPUsEnvironment Variables

Section titled “Environment Variables”Fine-tune behavior with environment variables:

# Force specific GPUexport CUDA_VISIBLE_DEVICES=0

# Thread tuningexport OMP_NUM_THREADS=8

# Memory limitsexport GGML_CUDA_NO_PINNED=1Best Practices

Section titled “Best Practices”For Beginners:

- Use default settings

- Start with smaller models (3-7B parameters)

- Enable GPU acceleration if available

For Power Users:

- Match backend to hardware precisely

- Tune memory settings for your VRAM

- Experiment with parallel slots for multi-tasking

For Developers:

- Enable verbose logging for debugging

- Use consistent settings across deployments

- Monitor resource usage during inference

Related Resources

Section titled “Related Resources”- Model Parameters Guide - Fine-tune model behavior

- Troubleshooting Guide - Detailed problem-solving

- Hardware Requirements - System specifications

- API Server Settings - Configure the local API